Brain-computer interfaces tap AI to enable a man with ALS to speak

Recently, researchers have begun developing speech brain-computer interfaces to restore communication for people who cannot speak.

BY THE CONVERSATION

Brain-computer interfaces are a groundbreaking technology that can help paralyzed people regain functions they’ve lost, like moving a hand. These devices record signals from the brain and decipher the user’s intended action, bypassing damaged or degraded nerves that would normally transmit those brain signals to control muscles.

Since 2006, demonstrations of brain-computer interfaces in humans have primarily focused on restoring arm and hand movements by enabling people to control computer cursors or robotic arms. Recently, researchers have begun developing speech brain-computer interfaces to restore communication for people who cannot speak.

As the user attempts to talk, these brain-computer interfaces record the person’s unique brain signals associated with attempted muscle movements for speaking and then translate them into words. These words can then be displayed as text on a screen or spoken aloud using text-to-speech software.

I’m a researcher in the Neuroprosthetics Lab at the University of California, Davis, which is part of the BrainGate2 clinical trial. My colleagues and I recently demonstrated a speech brain-computer interface that deciphers the attempted speech of a man with ALS, or amyotrophic lateral sclerosis, also known as Lou Gehrig’s disease. The interface converts neural signals into text with over 97% accuracy. Key to our system is a set of artificial intelligence language models – artificial neural networks that help interpret natural ones.
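To make an accuracy figure like this concrete: speech decoders are commonly scored by word error rate, the number of word substitutions, insertions and deletions needed to turn the decoded sentence into the intended one, divided by the number of intended words. The sketch below assumes accuracy is reported as 1 minus word error rate, which is a common convention but is not spelled out in this article. The function name and the example sentences are hypothetical and are not taken from the study.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance divided by the number of reference words."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # prev[j] holds the edit distance between the reference words seen so far
    # and the first j hypothesis words (classic Levenshtein dynamic program).
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            substitution_cost = 0 if ref_word == hyp_word else 1
            curr.append(min(
                prev[j] + 1,                      # reference word was dropped
                curr[j - 1] + 1,                  # extra word was inserted
                prev[j - 1] + substitution_cost,  # match or substitution
            ))
        prev = curr
    return prev[-1] / len(ref)


# Hypothetical example: one wrong word out of eight.
intended = "i would like a glass of water please"
decoded = "i would like a glass of water peas"

wer = word_error_rate(intended, decoded)
accuracy = 1.0 - wer
print(f"word error rate: {wer:.3f}, accuracy: {accuracy:.1%}")
# prints "word error rate: 0.125, accuracy: 87.5%"
```

Under this assumption, an accuracy above 97% corresponds to fewer than three word errors per hundred intended words.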

RECORDING BRAIN SIGNALS

The first step in our speech brain-computer interface is recording brain signals. There are several sources of brain signals, some of which require surgery to record. Surgically implanted recording devices can capture high-quality brain signals because they are placed closer to neurons, resulting in stronger signals with less interference. These neural recording devices include grids of electrodes placed on the brain’s surface or electrodes implanted directly into brain tissue.

In our study, we used electrode arrays surgically placed in the speech motor cortex of the participant, Casey Harrell. This is the part of the brain that controls muscles related to speech. We recorded neural activity from 256 electrodes as Harrell attempted to speak.

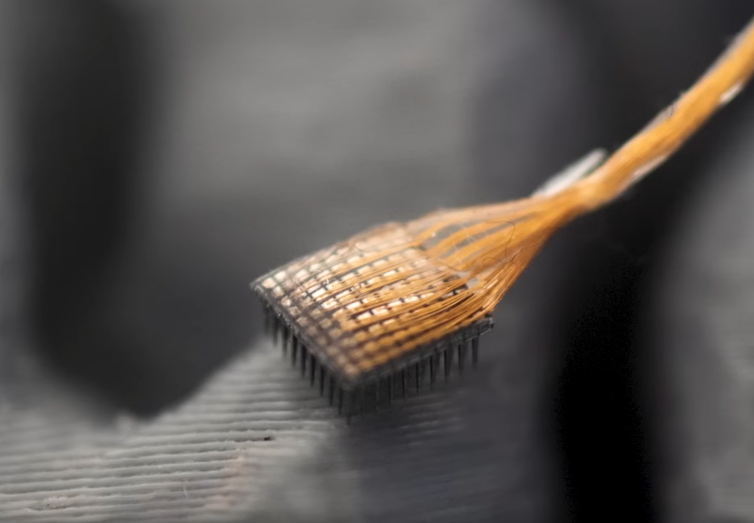

An array of 64 electrodes that embed into brain tissue records neural signals. UC Davis Health

DECODING BRAIN SIGNALS

The next challenge is relating the complex brain signals to the words
